One of the biggest challenges in text-to-image generation is composition. You can write a beautifully detailed prompt describing a complex scene, only for the model to ignore key elements or misplace objects.

Omost is an experimental project from Lvmin Zhang that explores using large language models (LLMs) for scene composition in Stable Diffusion pipelines. Rather than relying solely on a single prompt, Omost introduces a Canvas system where a fine-tuned LLM generates a detailed scene composed of global and local components. The components are then used to guide the image generation in a region-aware manner.

This post will be an overview of the project, its novel approach to scene composition, and how it produces more coherent and detailed images. We’ll end with a gallery of examples and a demo.

Note

Throughout this post, I’ll use “the LLM” to refer to the specific language model used, and “SDXL” to refer to the Stable Diffusion pipeline.

Introduction

I was looking into controlled generation and came across Omost while surveying other similar projects. The creator is a Stanford PhD candidate and well-known in the CV and AI communities for Fooocus, Forge, ControlNet (2023 ICCV best paper), and other projects. Omost reminded me of Fooocus in that it could generate beautiful images from simple prompts with no “prompt engineering” required.

To understand how Omost improves upon the default SDXL pipeline, we first need to establish a baseline understanding of how Stable Diffusion works.

Note

Read Hugging Face’s The Annotated Diffusion Model and Jay Alammar’s The Illustrated Diffusion for a more in-depth look at training and inference.

Stable Diffusion

The diffusion process looks like this:

- Start the image with random noise

- Gradually remove the noise

- Stop when the image looks good

The perfect cyberpunk cat (model: Fluently XL)

Stable Diffusion is known as a latent diffusion model or LDM because it operates in a compressed latent space rather than pixel space. In other words, if you are generating a 1024x1024 pixel image, the latent image being denoised by the UNet will be 128x128 pixels (8x reduction). A decoder is responsible for decompressing the denoised latent image.

When prompting Stable Diffusion models, there is a limit to how much information and detail you can provide. This is because SDXL pipelines use two different text encoders from the CLIP and OpenCLIP families.

CLIP

CLIP (contrastive language-image pre-training) accels at tasks like zero-shot image classification. It can tell you if an image looks like a cyberpunk cat, but it can’t explain to you what a cyberpunk cat is. This is because CLIP was trained with a contrastive objective, where it learned to score how similar or different images and captions are. This differs from how a LLM is trained, where it must learn to predict exact sequences of text.

Because CLIP doesn’t understand natural language the same way a LLM does, it can struggle with complex prompts describing very detailed scenes. Certain details will be lost or misinterpreted. Omost aims to circumvent this limitation by having the model focus on specific regions of the image while generating it.

Image Generation

Text generation is fundamentally a sequence modeling problem - predicting the next token given the previous ones. Each new word only depends on what came before it.

Images, however, are 2D structures where each pixel is spatially related to its neighbors in multiple directions. This makes predicting pixels much more complex than words.

Stable Diffusion reduces this complexity by working in a compressed latent space. At each inference step, we are asking the UNet to guess how to make the current working image look more like a cyberpunk cat. This is a much easier problem to solve than predicting all pixels directly.

Note

I will briefly cover diffusion transformers, which essentially reframes the denoising process as a sequence of tokens to predict, towards the end.

Canvas

Omost’s Canvas system is a novel approach to scene composition. LLMs are really good at generating code, particularly Python. The idea was to give the LLM a way to describe an image using a structured format it was already familiar with.

The Canvas system serves as an intermediate representation between the LLM and SDXL. It’s a Python class with two methods: set_global_description and add_local_description. The LLM structures the scene as a series of calls to these methods.

class Canvas:

def set_global_description(

self,

description: str,

detailed_descriptions: list[str],

tags: str,

HTML_web_color_name: str,

):

pass

def add_local_description(

self,

location: str,

offset: str,

area: str,

distance_to_viewer: float,

description: str,

detailed_descriptions: list[str],

tags: str,

atmosphere: str,

style: str,

quality_meta: str,

HTML_web_color_name: str,

):

passThe Canvas system also enables an interactive workflow where you can conversationally ask the LLM to make specific changes to the global or local descriptions. Instead of trying to edit an existing image using something like InstructPix2Pix, you’ll work with the LLM to update the textual descriptions and only regenerate the image once you’re satisfied.

Structured Scene Composition

The Canvas is first divided into a 3x3 grid of regions:

+--------------+--------------+--------------+

| top-left | top | top-right |

+--------------+--------------+--------------+

| left | center | right |

+--------------+--------------+--------------+

| bottom-left | bottom | bottom-right |

+--------------+--------------+--------------+The LLM will choose one of 9 different-sized bounding boxes to represent each local description. It will then position the bounding box in the corresponding region of the Canvas.

This approach was inspired by Region Proposal Networks in the Faster R-CNN paper.

Initial Latent Image

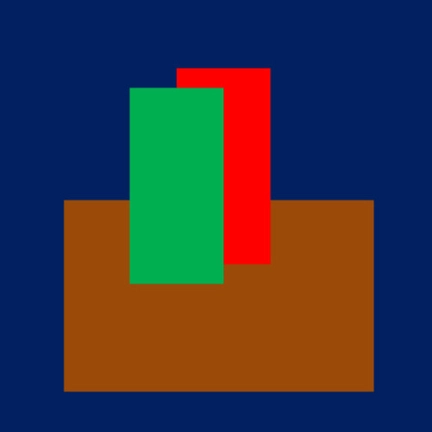

With the Canvas generated, a basic image of colored overlapping rectangles is then created. The rectangles represent the bounding boxes of the local descriptions in the Canvas. For example, a green bottle in front of a red bottle on a wood table in a dark room might result in something like this.

During the denoising process, the green rectangle part of the image would pay attention to the local description of the green bottle, while the red rectangle part would pay attention to the red bottle description, and so on.

In the actual implementation, starting with the initial latent image is optional. When using it, the edges of the rectangles are blurred to encourage smooth transitions between regions. Otherwise, the diffusion process starts with random noise as usual.

Personally, I found that the initial latent image was useful for seeing how the regions were laid out by the LLM, but not helpful for generating the final image.

Sub-prompts

All descriptions in the Canvas are considered sub-prompts. A sub-prompt must be under 75 tokens, although in practice most are only around 40 tokens.

The token limitation is due to CLIP’s input limit of 75 tokens. You see, many Stable Diffusion implementations either truncate prompts at 75 tokens or chunk them into vectors and average them, which can lead to embeddings that don’t make sense depending on where the splits occured. By keeping the sub-prompts small, we ensure no information loss.

The sub-prompts and tags are combined using a greedy merging strategy to efficiently pack them into chunks known as bags. Each bag is a collection of descriptions that, when concatenated, fits just under the 75-token limit.

Attention

Omost replaces SDXL’s default attention with two custom processors that handle attention differently.

The OmostSelfAttnProcessor implements standard self-attention. Each token can attend to all other tokens, allowing the model to capture relationships between different parts of the image.

The OmostCrossAttnProcessor handles attention between image tokens and text embeddings in a region-aware manner. Region masks are resized to match the hidden state dimensions. The masks are scaled by (H * W) / sum(masks) to maintain proper attention distribution. Attention weights outside the mask are set to negative infinity, effectively zeroing them out. The final attention is computed using these masks and scaled weights. This approach ensures that each region’s tokens primarily attend to their corresponding text descriptions while still maintaining awareness of the global context.

Putting It All Together

When generating an image with Omost:

- The LLM generates a scene description using the Canvas API.

- The

Canvasis converted to an initial latent image and a set of region-specific descriptions. - The custom attention processors ensure each region attends to its own description.

- The image is gradually denoised using the Karras schedule.

Diffusion Transformers

Earlier in the year, Stability released Stable Diffusion 3 (SD3). Last month, Black Forest Labs released FLUX.1. These are diffusion transformers or DiT. Hugging Face wrote a blog post on SD3 that discusses the sequence modeling approach and flow-matching objective.

Architecturally, both use an additional large text encoder (Google T5 XXL) that is able to understand more complex prompts than CLIP. Instead of a UNet, a transformer model is used. These models have state-of-the-art prompt adherance, but are slower and more expensive to run than SDXL.

Omost incorporates a language model (the LLM), but indirectly. This decoupling also means you could run the LLM in the cloud and SDXL locally.

Examples

For each example, I generated a baseline image using the latest RealVis XL. This way we can see if Omost is able to take the same input (prompt, seed, steps, CFG) and generate a better looking image. The baselines are on the left.

A lighthouse on a cliff at sunset.

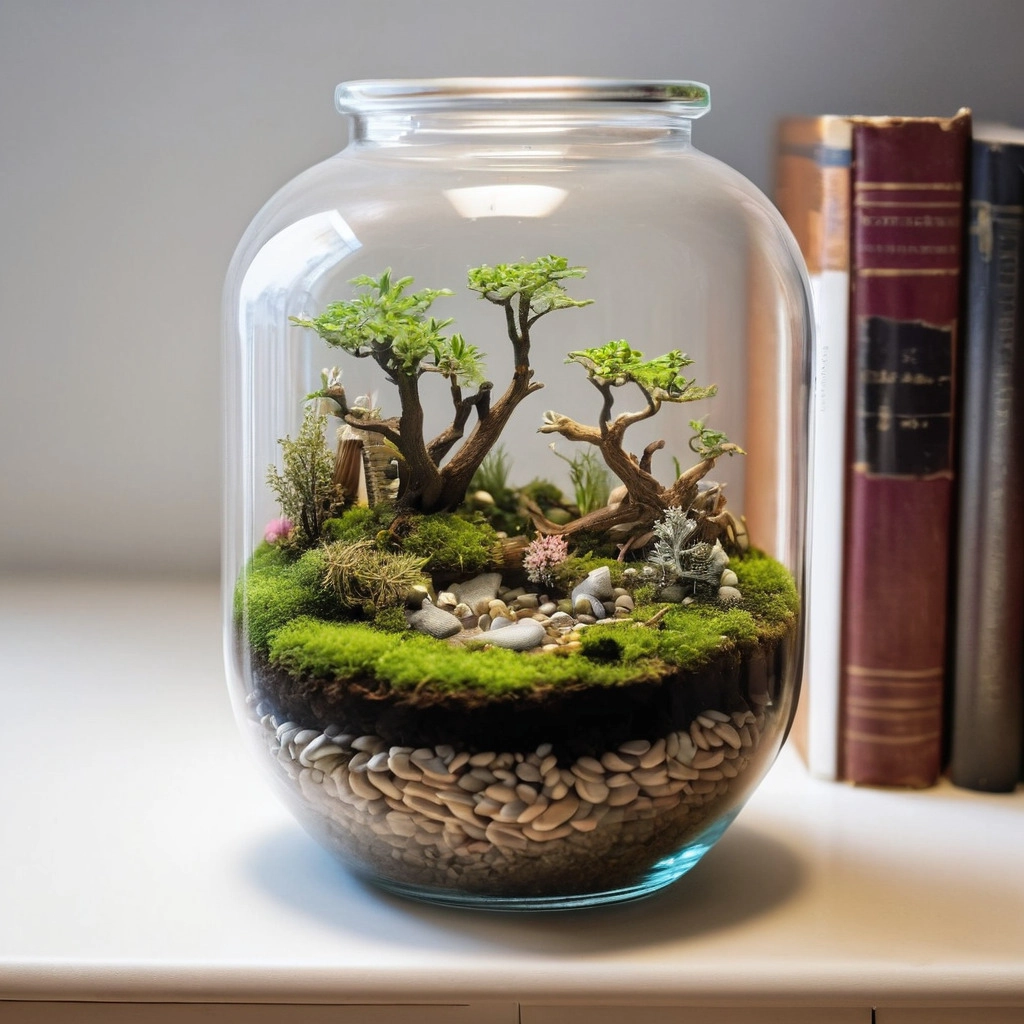

A miniature ecosystem in a glass container on a bookshelf.

A latte with a vibrant nebula swirling in the milk.

Conclusion

Omost is a powerful image generation tool. It can create detailed and coherent scenes from simple prompts. The Canvas system provides an interactive interface for editing and refining the scene description, while the custom attention processors ensure that each region attends to its own description.

Demo

Ready to take it for a spin? Use the embed below or launch the full version. You can also follow me on Bluesky and GitHub for more content.